Open source is reshaping the way teams build and deploy AI tools, and OpenWebUI is a prime example. It’s a sleek, self-hostable front end for local and remote LLM backends such as Ollama, giving developers a clean, intuitive interface for chatting with models like Llama, Mistral, and more.

In this guide, we’ll walk you through how to run OpenWebUI locally using Docker Compose — a quick and reliable setup that includes:

- ✅ OpenWebUI (frontend/backend)

- 🧠 Ollama (local LLM inference engine)

- 🗃️ PostgreSQL with pgvector (for vector storage)

- 📊 pgAdmin (for managing your database)

Let’s dive in.

🧱 Prerequisites

Before we get started, make sure you have:

- Docker and Docker Compose installed

- Enough RAM for the models you plan to run: Ollama’s guidance is at least 8 GB for 7B models, 16 GB for 13B models, and 32 GB for 33B models

- Git or any method to pull and run the project locally
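If you are unsure whether your machine clears the RAM bar, this Python sketch reads total physical memory and maps it onto Ollama’s rule of thumb from the list above. The helper names (`total_ram_gb`, `largest_model_class`) are hypothetical, and `os.sysconf` only exists on Unix-like systems, so on Windows the script simply reports that it could not detect RAM.

```python
import os
from typing import Optional

# Rule of thumb from the Ollama README: about 8 GB of RAM for 7B models,
# 16 GB for 13B models and 32 GB for 33B models (largest first).
RAM_GUIDANCE_GB = [(32, "33B"), (16, "13B"), (8, "7B")]


def total_ram_gb() -> Optional[float]:
    """Physical RAM in decimal GB, or None where os.sysconf is unavailable (e.g. Windows)."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        page_count = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None
    # Decimal GB makes it less likely that a machine sold as "16 GB" dips just
    # under the threshold because of memory reserved by firmware or the kernel.
    return page_size * page_count / 1e9


def largest_model_class(ram_gb: float) -> Optional[str]:
    """Largest model size the rule of thumb allows, or None below 8 GB."""
    for threshold, label in RAM_GUIDANCE_GB:
        if ram_gb >= threshold:
            return label
    return None


if __name__ == "__main__":
    ram = total_ram_gb()
    if ram is None:
        print("Could not detect total RAM on this platform")
    else:
        fit = largest_model_class(ram) or "none (below 8 GB)"
        print(f"{ram:.1f} GB RAM detected; suggested largest model size: {fit}")
```

Treat the result as a starting point: quantized models, context length, and whatever else is running on the machine all shift the real requirement.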

Step 1: Create Your docker-compose.yml

Here’s the full docker-compose.yml we’ll use to run all required services:

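```yaml
# docker-compose.yml

# Obsolete under Docker Compose v2 (ignored with a warning); safe to delete.
version: '3.8'

services:
  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: openwebui
    ports:
      - "3000:8080"   # web UI on http://localhost:3000
    environment:
      # Main application database (users, chats, settings)
      - DATABASE_URL=postgresql://openwebui:openwebui@db:5432/openwebui
      # Store document embeddings in the same Postgres instance via pgvector
      - VECTOR_DB=pgvector
      - PGVECTOR_DB_URL=postgresql://openwebui:openwebui@db:5432/openwebui
      # "ollama" is the service name below, resolvable inside the Compose network
      - OLLAMA_BASE_URL=http://ollama:11434
      # How uploaded documents are split into chunks
      - CHUNK_SIZE=2500
      - CHUNK_OVERLAP=500
    volumes:
      - openwebui-data:/app/backend/data
    depends_on:   # controls start order only; does not wait for readiness
      - db
      - ollama
    restart: unless-stopped

  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ollama-data:/root/.ollama   # downloaded models live here
    restart: unless-stopped
    command: ["serve"]

  db:
    image: pgvector/pgvector:pg15   # PostgreSQL 15 with the pgvector extension available
    container_name: openwebui-db
    environment:
      - POSTGRES_USER=openwebui
      - POSTGRES_PASSWORD=openwebui
      - POSTGRES_DB=openwebui
    ports:
      - "5432:5432"   # only needed for access from the host machine
    volumes:
      - db-data:/var/lib/postgresql/data
    restart: unless-stopped

  pgadmin:
    image: dpage/pgadmin4
    container_name: pgadmin
    environment:
      - PGADMIN_DEFAULT_EMAIL=admin@domain.com
      - PGADMIN_DEFAULT_PASSWORD=admin   # change for anything beyond local testing
    ports:
      - "8081:80"
    volumes:
      - pgadmin-data:/var/lib/pgadmin
    depends_on:
      - db
    restart: unless-stopped

volumes:
  openwebui-data:
  db-data:
  pgadmin-data:
  ollama-data:
```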

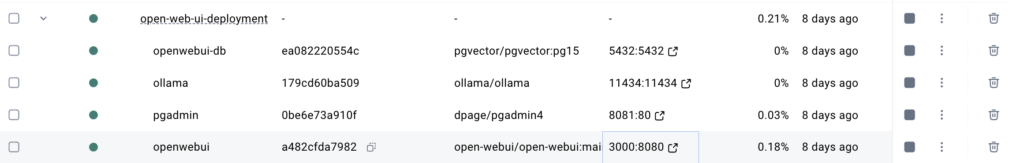
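The connection strings and credentials in this file are repeated across two services, so a typo in one of them is easy to miss. Here’s a minimal sketch of a hypothetical pre-flight check, assuming you copy the `environment` entries from the compose file into Python lists: it confirms that CHUNK_OVERLAP is smaller than CHUNK_SIZE and that both database URLs point at the `db` service with the credentials that service is created with. The names `parse_env` and `check_config` are illustrative, not part of OpenWebUI.

```python
from urllib.parse import urlsplit

# Values copied from docker-compose.yml (Compose's list-style "KEY=value" entries).
OPENWEBUI_ENV = [
    "DATABASE_URL=postgresql://openwebui:openwebui@db:5432/openwebui",
    "VECTOR_DB=pgvector",
    "PGVECTOR_DB_URL=postgresql://openwebui:openwebui@db:5432/openwebui",
    "OLLAMA_BASE_URL=http://ollama:11434",
    "CHUNK_SIZE=2500",
    "CHUNK_OVERLAP=500",
]
DB_ENV = [
    "POSTGRES_USER=openwebui",
    "POSTGRES_PASSWORD=openwebui",
    "POSTGRES_DB=openwebui",
]


def parse_env(entries):
    """Turn list-style environment entries into a dict (split on the first '=')."""
    return dict(entry.split("=", 1) for entry in entries)


def check_config(app_env, db_env, db_service="db"):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    size, overlap = int(app_env["CHUNK_SIZE"]), int(app_env["CHUNK_OVERLAP"])
    if not 0 <= overlap < size:
        problems.append(f"CHUNK_OVERLAP ({overlap}) must be smaller than CHUNK_SIZE ({size})")
    for key in ("DATABASE_URL", "PGVECTOR_DB_URL"):
        url = urlsplit(app_env[key])
        if url.hostname != db_service:
            problems.append(f"{key} host is {url.hostname!r}, expected {db_service!r}")
        if (url.username, url.password) != (db_env["POSTGRES_USER"], db_env["POSTGRES_PASSWORD"]):
            problems.append(f"{key} credentials do not match POSTGRES_USER/POSTGRES_PASSWORD")
        if url.path.lstrip("/") != db_env["POSTGRES_DB"]:
            problems.append(f"{key} database name does not match POSTGRES_DB")
    return problems


if __name__ == "__main__":
    issues = check_config(parse_env(OPENWEBUI_ENV), parse_env(DB_ENV))
    print("\n".join(issues) or "No problems found")
```

If you later change the Postgres password, update it in all three places (POSTGRES_PASSWORD and both URLs); this kind of check catches the one you forget.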

▶️ Step 2: Start Everything Up

To bring the entire stack up, just run:

```bash
docker-compose up -d
```

This command will:

- Launch the OpenWebUI interface on http://localhost:3000

- Expose pgAdmin at http://localhost:8081

- Start the Ollama inference server on port 11434

- Initialize a pgvector-enabled PostgreSQL instance for vector search support
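The command returns as soon as the containers are started, and the services can take a little longer to become reachable. As a rough readiness check, this Python sketch polls each published host port until a TCP connection succeeds. It assumes the port mappings from this guide’s compose file; `wait_for_port` is a hypothetical helper name. Note that an open port only means a process is listening, not that OpenWebUI has finished its startup work.

```python
import socket
import time

# Host ports published by the docker-compose.yml in this guide.
SERVICES = {
    "OpenWebUI": 3000,
    "pgAdmin": 8081,
    "Ollama": 11434,
    "PostgreSQL": 5432,
}


def wait_for_port(host: str, port: int, timeout: float = 60.0) -> bool:
    """Retry a TCP connection to host:port until it succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=2):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)  # brief pause between attempts


if __name__ == "__main__":
    for name, port in SERVICES.items():
        status = "up" if wait_for_port("localhost", port) else "not reachable"
        print(f"{name:<10} localhost:{port:<5} {status}")
```

If a service stays unreachable, `docker-compose logs <service>` is the next place to look.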

🧠 Step 3: Load Your First Model (via Ollama)

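Once the stack is running, pull a model such as mistral or llama3. Because Ollama runs inside the ollama container, call its CLI through docker exec:

```bash
docker exec -it ollama ollama pull mistral
```

To chat with the model straight from your terminal instead, use:

```bash
docker exec -it ollama ollama run mistral
```

If you also have the Ollama CLI installed on your host, plain `ollama pull mistral` works too, since it talks to localhost:11434, which the compose file publishes.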

OpenWebUI reaches the Ollama server through the OLLAMA_BASE_URL environment variable (http://ollama:11434, the service name on the Compose network), so any model you pull shows up in the model selector of the web interface.
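OpenWebUI talks to Ollama over HTTP, and the same API is reachable from your host through the published port 11434. Here’s a small sketch using only the Python standard library: it lists installed models via `GET /api/tags` and sends a non-streaming prompt to `POST /api/generate`. It assumes the default port mapping from this guide and that you have already pulled `mistral`; the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # host port published for the ollama service


def model_names(tags_payload: dict) -> list:
    """Extract model names from an Ollama GET /api/tags response."""
    return [m["name"] for m in tags_payload.get("models", [])]


def list_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the running Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))


def generate_request(prompt: str, model: str = "mistral", base_url: str = OLLAMA_URL):
    """Build a non-streaming POST /api/generate request (one JSON reply, not a stream)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    print("Installed models:", list_models())
    # Generous timeout: the first request may need to load the model into memory.
    with urllib.request.urlopen(generate_request("Say hello in one sentence."), timeout=300) as resp:
        print(json.load(resp)["response"])
```

Setting `"stream": False` keeps the example simple; by default Ollama streams the answer as a sequence of JSON lines.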

📊 Step 4: Manage Your Vector Database

You can log into pgAdmin at http://localhost:8081 using:

- Email: admin@domain.com

- Password: admin

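Add a new server and connect it to the openwebui PostgreSQL database. Use the Compose service name db as the host rather than localhost, because pgAdmin runs in its own container on the same Compose network:

- Host: db

- Port: 5432

- Username: openwebui

- Password: openwebui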

This is especially helpful if you want to inspect how OpenWebUI stores document chunks and their embeddings in your database.
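The database is reachable from your host as well, since the compose file publishes port 5432. The only change is the hostname: `db` resolves only inside the Compose network, so host-side tools need `localhost`. This sketch rewrites the container URL accordingly (`to_host_url` is a hypothetical helper). The optional query at the end assumes the third-party `psycopg` (v3) package is installed and simply lists the tables in the `public` schema, a quick way to see what OpenWebUI has created.

```python
from urllib.parse import urlsplit, urlunsplit

# URL OpenWebUI uses inside the Compose network (from docker-compose.yml).
CONTAINER_DB_URL = "postgresql://openwebui:openwebui@db:5432/openwebui"


def to_host_url(container_url: str, host: str = "localhost", port: int = 5432) -> str:
    """Swap the container hostname/port for ones reachable from the host machine."""
    parts = urlsplit(container_url)
    credentials = ""
    if parts.username:
        credentials = parts.username
        if parts.password:
            credentials += f":{parts.password}"
        credentials += "@"
    return urlunsplit(parts._replace(netloc=f"{credentials}{host}:{port}"))


if __name__ == "__main__":
    host_url = to_host_url(CONTAINER_DB_URL)
    print("Connect from the host with:", host_url)
    try:
        import psycopg  # third-party: pip install "psycopg[binary]"
    except ImportError:
        raise SystemExit("Install psycopg to run the table listing")
    with psycopg.connect(host_url) as conn:
        rows = conn.execute(
            "SELECT tablename FROM pg_tables WHERE schemaname = 'public' ORDER BY tablename"
        ).fetchall()
    for (table,) in rows:
        print(table)
```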

🛑 Stopping the Stack

To shut everything down, run:

```bash
docker-compose down
```

Your data (vector store, models, config) survives this command because it is persisted in named Docker volumes. Only `docker-compose down -v` removes those volumes, so leave off `-v` unless you want to start from scratch.

✅ You’re All Set!

You now have a fully functional local AI assistant running on your machine, with:

- A sleek, open-source web UI for interacting with LLMs

- Support for local models via Ollama

- Full vector search and document chunking

- A PostgreSQL + pgvector database backend

- Admin tools via pgAdmin

Pro Tips

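- Use `ollama list` to view all downloaded models (with this setup, run it as `docker exec -it ollama ollama list`)

- Change the `CHUNK_SIZE` and `CHUNK_OVERLAP` values in the environment to tune how your documents are split and stored. Recent OpenWebUI versions save settings like these in the database after the first start, so later changes to the environment variables may be ignored; if so, adjust them in the admin settings instead

- Mount custom folders inside the OpenWebUI volume for persistent document uploads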
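To get a feel for what the two chunking settings do, here’s a simplified character-based splitter in Python. It is an illustration only, not OpenWebUI’s implementation: OpenWebUI’s splitter is more elaborate (by default it prefers natural break points such as paragraph boundaries), so real chunk boundaries will differ. The function name `chunk_text` is hypothetical.

```python
def chunk_text(text: str, chunk_size: int = 2500, chunk_overlap: int = 500) -> list:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        end = min(start + chunk_size, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        # Step back by the overlap so consecutive chunks share some context.
        start = end - chunk_overlap
    return chunks


if __name__ == "__main__":
    sample = "x" * 6000
    print([len(c) for c in chunk_text(sample)])
```

With the values from this guide, each new chunk starts 2,000 characters after the previous one and repeats its last 500 characters, so a 6,000-character document yields chunks of 2,500, 2,500, and 2,000 characters. Larger overlaps preserve more context across boundaries at the cost of storing more (partly duplicated) vectors.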

💬 Have Feedback?

We’d love to hear from you. If you’re using OpenWebUI internally or as part of your product stack, let us know how it’s going and what we can improve.